This blog post is a continuation of the comparison between DbC and TDD that started with a dedicated look at code specification and covered other aspects in part 1 and part 2. It takes a look at further points and shows the characteristics (commonalities and differences) of TDD and DbC towards these aspects.

Universality

One of the most important differences between DbC and TDD is their different universality, because it influences expressiveness as well as verifiability of correctness.

A significant limitation (or better characteristic) of TDD is that tests are example-driven. With a normal manually written unit test you provide exemplaric input data and check for certain expected output values after the test run. Thus you have concrete pairs of input/output values to express a certain feature/behavior that you want to test. Since these values are only examples there has to be a process of finding relevant examples which can be very difficult. One step towards correctness is high code coverage. You should find examples that examine all paths of your code components. That can be a mess since the amount of possible code paths grows exponentially with the count of branches (if, switch/case, throw, …). But even if you find examples that give you 100% code coverage you cannot be sure that the component acts right for all possible input values. On the one side there could be hardware-dependent behavior (e.g. with arithmetic calculations) that leads to differences or exceptions (division-by-zero, underflow/overflow etc.). On the other side perhaps you get 100% code coverage for your components during the TDD process, but this doesn’t help much on integration with other code components or external systems that could act in complex ways. This problem goes beyond TDD. Thus unit tests in a TDD fashion are nice by their flexibility and simplicity, but they cannot give you full confidence. Solutions like Pex can help you here, but this is part of another story (that I hope to come up with in the near future).

DbC on the other side introduces universal contracts that must hold for every value that an object as part of a contract can take. That’s an important aspect to ensure correct behavior in all possible cases. Thus contracts have a higher value in terms of universality than tests (but they fall back in other terms).

Expressiveness

The expressiveness of a component’s behavior and qualities as part of its specification is an important aspect since you want to be able to express arbitrary properties in a flexible and easy way. Universality is one part of expressiveness and has just been discussed. Now let’s look at expressiveness on a broader scope.

TDD has a high value on expressiveness besides the exemplaric nature of tests. You are free to define tests which express any desired behavior of a component that can be written in code. With TDD you have full flexibility, but you are also responsible to get this flexibility under your control (clear processes should be what you need). One aspect that goes beyond the scope of TDD is interaction/collaborative testing and integration testing. The TDD process implies the design of code components in isolation, but it doesn’t guide you in testing the interactions between components (and specification of behavior which relies on those interactions). TDD is about unit testing, but there is a universe beyond that.

Their universal nature makes contracts in terms of DbC a valuable tool. And moreover by extending the definition of a code element they improve the expressiveness of these code elements, what’s great e.g. for the role of interfaces and for intention revealing. But they have downsides as well. First they are tightly bound to a certain code element and are not able to express behavior that spans several components (e.g. workflows and classes interacting together). And second they have a lack of what I would call contentual expressiveness. With contracts you are able to define expectations with arbitrary boolean expressions and that’s a great thing. But it’s also a limitation. For example if you have an algorithm or complex business operation then it’s difficult to impossible to define all expected outcomes of this code as universal boolean postcondition. In fact this would lead to full functional specification which implies a duplication of the algorithm logic itself (in imperative programming) and this would make no sense! On the other side if you use example-driven tests you would not have a problem since you should know what values to expect on a certain input. Furthermore no side effects are allowed inside of a contract. Hence if you use a method to define a more complex expectation this method must be pure (= free of visible side effects). The background of this constraint is that contracts mustn’t influence the behavior of the core logic itself. It would be a mess if there would be a different behavior depending on the activation state (enabled/disabled) of contracts (e.g. for different build configurations). This has a limitation if you want to define certain qualitites with contracts like x=pop(push(x)) for a stack implementation, but it has advantages as well, since it leads to the enforcement of command-query separation by contracts.

Checking correctness

Of course you want to be able to express as many behaviors as possible to improve the specification of your code components. But expressiveness is not leading anywhere when it’s impossible to figure out if your components follow the defined specification. You must be able to stress your components against their specification in a reproducible way to ensure correct behavior!

With TDD correctness of the defined behavior (= tests) can be checked by actually running the tests and validating expected values against the actual values as outcome of a test run. The system-under-test is seen as blackbox and behavior correctness is tested by writing values to the input channels of the blackbox and observe the output channels for correct values. This could include techniques like stubbing or mocking for handling an object in isolation and for ensuring reproducible and verifiable state and behavior. This testability and reproducibility in conjunction with a well implemented test harness is important for continuously checking correctness of your code through regression testing. It’s invaluable when performing continuous integration and when code is changed, but this aspect will be covered in the next blog post. However the exemplaric nature of tests is a limitation for checking correctness which has been shown above when discussing universality.

At first DbC as principle doesn’t help you in terms of checking correctness. It introduces a fail-fast strategy (if a contract is not met, it’s a bug – so fail fast and hard, because the developer has to fix the bug), but how can you verify the correctness of your implementation? With DbC bugs should be found in the debugging step when developing code, thus by actually executing the code. And common solutions like Code Contracts for .NET come with a runtime checking component that checks the satisfaction of the defined contracts when running the code. But this solution has a serious shortcoming: It relies on the current execution context of your code and hence it takes the current values for checking the contracts. With this you get the same problems as with tests. Your contracts are stressed by example and even worse you have no possibility to reproducible check your contracts! Thus dynamic checking without the usage of tests makes no sense. However contracts are a great complement of tests. They specify the conditions that must apply in general and thus a test as client of a component can satisfy the preconditions and then validate postconditions or custom test behavior. Another interesting possibility to verify contracts is static checking. Code Contracts come with a static checker as well that verifies the defined contracts at compile-time without executing the code. On the contrary it actually inspects the code (gathers facts about it) and matches the facts as abstraction of the implementation against the defined contracts. This form of whitebox code inspection is done by the abstract interpretation algorithm (there are other solutions like Spec# that do real formal program verification). The advantage of static checking is that it’s able to find all possible contract violations, independent of any current values. But static checking is hard. On the one side it’s hard for the CPU to gather information about the code and to verify contracts. Hence static checking is very time-consumptive which is intolerable especially for bigger code bases. On the other side it’s hard for a developer to satisfy the static checker. To work properly static checking needs the existence of the right (!) contracts on all used components (e.g. external libraries) which is often not the case. And looking at the static checker from Code Contracts it seems to be too limited at the current development state. Many contracts cannot be verified or it’s too impractical to define contracts that satisfy the checker. Thus static checkers are a great idea to universally verify contracts, but especially in the .NET world the limitations of the checkers make them impractical for most projects. Hence a valid strategy for today is to write tests in conjunction with contracts and to validate postconditions and invariants with appropriate tests.

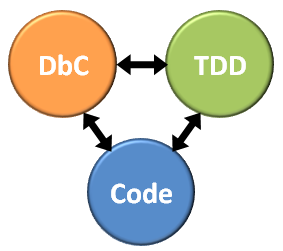

To say first, Test-Driven Development (TDD) and Design by Contract (DbC) have very similar aims. Both concepts want to improve software quality by providing a set of conceptual building blocks that allow you to prevent bugs before making them. Both have impact on the design or development process of software elements. But both have their own characteristics and are different in achieving the purpose of better software. It’s necessary to understand commonalities and differences in order to take advantage of both principles in conjunction.

To say first, Test-Driven Development (TDD) and Design by Contract (DbC) have very similar aims. Both concepts want to improve software quality by providing a set of conceptual building blocks that allow you to prevent bugs before making them. Both have impact on the design or development process of software elements. But both have their own characteristics and are different in achieving the purpose of better software. It’s necessary to understand commonalities and differences in order to take advantage of both principles in conjunction.

In essence, DbC and TDD are about specification of code elements, thus expressing the shape and behavior of components. Thereby they are extending the specification possibilities that are given by a programming language like C#. Of course such basic code-based specification is very important, since it allows you to express the overall behavior and constraints of your program. You write interfaces, classes with methods and properties, provide visibility and access constraints. Furthermore the programming language gives you possibilities like automatic boxing/unboxing, datatype safety, co-/contravariance and more, depending on the language that you use. Moreover the language compiler acts as safety net for you and ensures correct syntax.

In essence, DbC and TDD are about specification of code elements, thus expressing the shape and behavior of components. Thereby they are extending the specification possibilities that are given by a programming language like C#. Of course such basic code-based specification is very important, since it allows you to express the overall behavior and constraints of your program. You write interfaces, classes with methods and properties, provide visibility and access constraints. Furthermore the programming language gives you possibilities like automatic boxing/unboxing, datatype safety, co-/contravariance and more, depending on the language that you use. Moreover the language compiler acts as safety net for you and ensures correct syntax.